What is Explainable AI (XAI)?

Explainable AI (XAI) refers to the concept and set of techniques that aim to make artificial intelligence and machine learning models more transparent and understandable to humans. It addresses the challenge of "black-box" AI models, which can provide accurate predictions or decisions but lack the ability to explain how they arrived at those outcomes.

In many artificial intelligence (AI) systems, especially those powered by deep learning or other complex algorithms, the decision-making process is opaque, making it difficult for users and stakeholders to trust, validate, or interpret the results. This lack of transparency can lead to several issues, including:

- Lack of Trust: Users may be hesitant to rely on AI systems when they cannot understand why a particular decision was made.

- Regulatory Compliance: In some applications, such as healthcare or finance, there are legal requirements to explain AI-generated decisions, which an opaque model may be unable to meet.

- Safety and Ethics: In critical domains like healthcare or autonomous vehicles, understanding the reasoning behind AI decisions is crucial for ensuring safety and ethical behavior.

- Bias and Fairness: Opacity in AI models can make biases hard to detect and mitigate, potentially leading to unfair treatment of certain groups.

Explainable AI addresses these concerns by providing insight into how an AI model arrives at its conclusions. It offers human-readable explanations for individual predictions or for the model's overall behavior. By understanding the factors that influence the model's decisions, users can gain confidence in the system, detect potential biases, and diagnose problems when the model makes mistakes.

Why is XAI important?

XAI is essential for several reasons. First, it enhances trust and transparency by enabling humans to understand how complex models make decisions, especially in critical domains such as healthcare and finance. Second, it provides insight into a model's decision-making process, helping users identify and mitigate potential biases and make better-informed decisions. Third, it reduces the risk of AI misuse by making errors and unintended consequences easier to detect and address. Fourth, it helps organizations comply with regulations that require information about how automated decisions are made, such as the EU's General Data Protection Regulation (GDPR). Finally, it improves user acceptance by enabling people to understand, and therefore trust, AI systems that profoundly affect their lives.

XAI methods

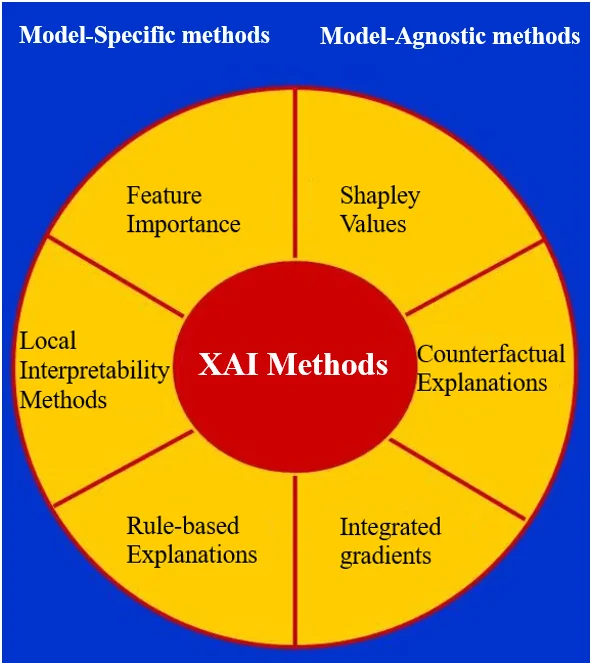

There are many different XAI methods, but they can be broadly categorized into two groups: model-specific methods and model-agnostic methods.

Model-specific methods

Model-specific methods are designed for a particular type of AI model, such as decision trees or neural networks. These methods typically work by analyzing the model's internal structure or by tracing the model's decision-making process. Some examples of model-specific methods include:

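- Feature importance: These methods identify the features that are most important for the model's predictions, for example by reading the coefficients of a linear model or the impurity-based importance scores of a tree ensemble.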

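- Local interpretability methods: These methods explain the model's prediction for a single input instance, for example by propagating the output backward through a neural network's layers to score each input feature.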

- Rule-based explanations: These methods generate human-readable rules that describe the model's decision-making process, for example by converting each path through a decision tree into an if-then rule.
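To make the rule-based idea concrete, here is a minimal Python sketch that turns every root-to-leaf path of a decision tree into an IF-THEN rule. It is model-specific because it reads the tree's internal splits directly. The `Leaf` and `Split` classes, the `extract_rules` function, and the loan-approval tree with its `income` and `debt_ratio` features are all hypothetical, built by hand for illustration rather than taken from any library.

```python
from dataclasses import dataclass
from typing import List, Optional, Union


@dataclass
class Leaf:
    label: str  # class predicted at this leaf


@dataclass
class Split:
    feature: str      # feature name tested at this node
    threshold: float  # go left if value <= threshold, otherwise right
    left: "Node"
    right: "Node"


Node = Union[Leaf, Split]


def extract_rules(node: Node, conditions: Optional[List[str]] = None) -> List[str]:
    """Turn every root-to-leaf path of a decision tree into one IF-THEN rule."""
    conditions = conditions or []
    if isinstance(node, Leaf):
        premise = " AND ".join(conditions) if conditions else "TRUE"
        return [f"IF {premise} THEN {node.label}"]
    # Each split adds one condition to every rule in its left or right subtree.
    return (extract_rules(node.left, conditions + [f"{node.feature} <= {node.threshold}"])
            + extract_rules(node.right, conditions + [f"{node.feature} > {node.threshold}"]))


# Hypothetical loan-approval tree, built by hand for illustration.
tree = Split("income", 50000.0,
             left=Leaf("reject"),
             right=Split("debt_ratio", 0.4, left=Leaf("approve"), right=Leaf("reject")))

for rule in extract_rules(tree):
    print(rule)
# IF income <= 50000.0 THEN reject
# IF income > 50000.0 AND debt_ratio <= 0.4 THEN approve
# IF income > 50000.0 AND debt_ratio > 0.4 THEN reject
```

Because each leaf produces exactly one rule, the rules are mutually exclusive and together cover every possible input, so they describe the tree's behavior completely. This only works because the tree's structure is directly readable, which is what makes the method model-specific.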

Model-agnostic methods

Model-agnostic methods are designed to explain any AI model, regardless of its internal structure. They treat the model as a black box: they typically perturb the model's inputs and observe how its predictions change. Some examples of model-agnostic methods include:

- Counterfactual explanations: These methods generate explanations that show how a model's prediction would change if one or more of the input features were different.

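- Shapley values: Borrowed from cooperative game theory, these methods quantify each feature's contribution to a prediction by averaging its marginal contribution over all possible combinations of the other features.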

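- Integrated gradients: These methods attribute a prediction to the input features by accumulating the model's gradients along a straight-line path from a baseline input to the actual input. Because they need access to those gradients, they apply only to differentiable models such as neural networks, so strictly speaking they are model-specific rather than model-agnostic.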
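The sketch below illustrates two of these model-agnostic ideas in Python, treating the model purely as a function from a feature vector to a score. `shapley_values` computes exact Shapley values under the assumption that a feature "absent" from a coalition is replaced by a fixed baseline value (here all zeros); `counterfactual` is a deliberately naive search that nudges a single feature until the score reaches a decision threshold. The `score` model, the threshold of 5.0, and both function names are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Optional, Sequence

# The model is treated as an opaque function from a feature vector to a score.
Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values of model(x), with 'absent' features set to their baseline value."""
    n = len(x)

    def value(coalition: set) -> float:
        # Features in the coalition take their values from x; the rest come from the baseline.
        return model([x[j] if j in coalition else baseline[j] for j in range(n)])

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition S.
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def counterfactual(model: Model, x: Sequence[float], feature: int, step: float,
                   threshold: float, max_steps: int = 100) -> Optional[List[float]]:
    """Nudge one feature by `step` until model(z) >= threshold; None if never reached."""
    z = list(x)
    for _ in range(max_steps):
        if model(z) >= threshold:
            return z
        z[feature] += step
    return z if model(z) >= threshold else None


# Hypothetical scoring model with an interaction between features 0 and 1.
def score(z: Sequence[float]) -> float:
    return 2 * z[0] + z[1] + z[0] * z[1] - z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
print([round(p, 6) for p in shapley_values(score, x, baseline)])  # [3.0, 3.0, -3.0]
print(counterfactual(score, x, feature=1, step=0.5, threshold=5.0))  # [1.0, 3.0, 3.0]
```

The three Shapley values sum to score(x) - score(baseline) = 3, and the interaction term `z[0] * z[1]` is split evenly between features 0 and 1. Exact computation evaluates the model on every subset of features, which grows exponentially with the number of features, so practical tools approximate Shapley values by sampling. Likewise, practical counterfactual methods usually search over several features at once and prefer changes that stay close to the original input.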

Techniques used in XAI

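There are various techniques used in XAI, ranging from feature-attribution methods such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which explain individual predictions, to global surrogate models, which approximate a complex model with a simpler, interpretable one, and layer-wise relevance propagation (LRP), which traces a deep neural network's output back through its layers to the input features. These methods aim to highlight the most influential features or patterns the AI model uses to make predictions, making it easier for humans to understand and validate the decision process.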
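The following Python sketch shows the local-surrogate idea behind LIME in simplified form: sample perturbations around one instance, weight each sample by its proximity to that instance, and fit a weighted linear model whose coefficients serve as the local explanation. It is an illustration, not the `lime` package's implementation; the `local_surrogate` and `solve` functions, the Gaussian perturbation scale, the exponential kernel and its width, and the `black_box` function are all assumptions chosen for this example.

```python
import math
import random
from typing import Callable, List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_surrogate(model: Callable[[Sequence[float]], float], x: Sequence[float],
                    n_samples: int = 500, noise: float = 0.1,
                    kernel_width: float = 0.75, seed: int = 0) -> List[float]:
    """Fit a proximity-weighted linear model to `model` around `x`.

    Returns [intercept, coef_0, coef_1, ...]. Each coefficient approximates the
    local effect of one feature on the model's output near x.
    """
    rng = random.Random(seed)
    p = len(x) + 1  # intercept plus one coefficient per feature
    xtwx = [[0.0] * p for _ in range(p)]  # accumulates X^T W X
    xtwy = [0.0] * p                      # accumulates X^T W y
    for _ in range(n_samples):
        # Perturb the instance with Gaussian noise and query the black box.
        offsets = [rng.gauss(0.0, noise) for _ in x]
        y = model([xi + di for xi, di in zip(x, offsets)])
        # Exponential kernel: samples closer to x get larger weights.
        w = math.exp(-sum(d * d for d in offsets) / kernel_width ** 2)
        # Regress on offsets from x, so the intercept approximates f(x).
        row = [1.0] + offsets
        for i in range(p):
            xtwy[i] += w * row[i] * y
            for j in range(p):
                xtwx[i][j] += w * row[i] * row[j]
    return solve(xtwx, xtwy)


# Hypothetical black box: nonlinear in feature 0, linear in feature 1.
def black_box(z: Sequence[float]) -> float:
    return z[0] ** 2 + z[1]


coefs = local_surrogate(black_box, [2.0, 0.0])
# The slopes should be close to the true local gradient (2 * 2.0, 1) = (4, 1).
print([round(c, 2) for c in coefs[1:]])
```

For a linear black box the surrogate recovers the true coefficients; for a nonlinear one it only approximates the slope near the explained instance, which is why explanations of this kind are local by design.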

Conclusion

Explainable AI is a crucial aspect of responsible AI development: it enhances the transparency, accountability, and trustworthiness of AI systems, making them more accessible and beneficial to users and to society as a whole.