Decimal vs Double vs Float

In .NET, Decimal, Float, and Double are numeric data types for representing numbers with fractional parts, each with a different precision and range. In C# they are written with the keywords decimal, float, and double, which are aliases for System.Decimal, System.Single, and System.Double. Their characteristics make them suitable for different scenarios, depending on precision requirements and memory constraints.

Decimal

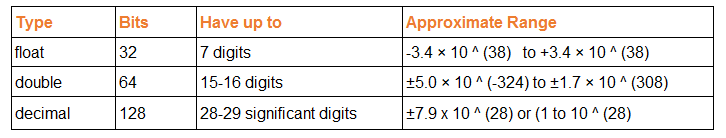

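The Decimal data type in .NET is a 128-bit, high-precision floating-point type that stores its value in base 10, which is why it is also known as a "decimal floating-point" type. It is commonly used for financial and monetary calculations, because decimal fractions such as 0.1 are stored exactly instead of being approximated in binary. Decimal provides 28-29 significant digits of precision and a range from approximately ±1.0 x 10^-28 to ±7.9 x 10^28. Results that need more digits than that, such as 1/3, are still rounded.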
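The following is a minimal sketch of that behavior, not a definitive test of the type. The class and method names (DecimalDemo, SumTenths, ThirdTimesThree) are made up for illustration. It shows that repeated additions of 0.1 stay exact in Decimal, while a value such as 1/3 is still rounded to the available digits.

```csharp
using System;

public static class DecimalDemo
{
    // Adds 0.1 ten times using decimal arithmetic.
    public static decimal SumTenths()
    {
        decimal total = 0m;
        for (int i = 0; i < 10; i++)
        {
            total += 0.1m; // the m suffix marks a decimal literal
        }
        return total;
    }

    // Divides by three and multiplies back; the quotient is rounded
    // to the digits a decimal can hold, so the product is not exactly 1.
    public static decimal ThirdTimesThree()
    {
        decimal third = 1m / 3m;
        return third * 3m;
    }

    public static void Main()
    {
        Console.WriteLine(SumTenths() == 1.0m);       // True
        Console.WriteLine(ThirdTimesThree() == 1.0m); // False
    }
}
```

Decimal removes the binary approximation of base-10 fractions, but it does not remove rounding altogether.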

Float

The Float data type (System.Single) is a 32-bit single-precision binary floating-point type, often used when memory usage matters more than precision. Float provides about 7 significant digits of precision and a range from approximately ±1.5 x 10^-45 to ±3.4 x 10^38. Because of its limited precision, it introduces rounding errors for any value that needs more digits than it can hold, and for decimal fractions such as 0.1 that have no exact binary representation.
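As a rough illustration of these limits, the sketch below uses two hypothetical helper methods (the names FloatDemo, LosesIncrement, and TenthMatchesDouble are not from any library). It relies on the fact that a float has a 24-bit significand, so whole numbers above 2^24 can no longer all be represented.

```csharp
using System;

public static class FloatDemo
{
    // Above 2^24 (16777216) a float cannot represent every whole number,
    // so adding 1 is rounded away.
    public static bool LosesIncrement()
    {
        float big = 16777216f;           // 2^24
        float next = (float)(big + 1f);  // explicit cast forces rounding to float
        return next == big;
    }

    // 0.1f is only an approximation of 0.1, and a coarser one than the double 0.1.
    public static bool TenthMatchesDouble()
    {
        float tenth = 0.1f;
        return (double)tenth == 0.1;
    }

    public static void Main()
    {
        Console.WriteLine(LosesIncrement());     // True
        Console.WriteLine(TenthMatchesDouble()); // False
    }
}
```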

Double

The Double data type is a 64-bit double-precision binary floating-point type, offering more significant digits and a larger range than Float. It provides 15-16 significant digits of precision and a range from approximately ±5.0 x 10^-324 to ±1.7 x 10^308. Double is the type C# assigns to a real literal with no suffix, and it is often used for general-purpose numerical calculations that require more precision than Float. Like Float, it stores values in binary, so decimal fractions such as 0.1 are approximated.
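The sketch below shows the usual consequence of binary storage and one common way to work around it. The names DoubleDemo, SumEqualsExactly, and NearlyEqual are illustrative, and the tolerance of 1e-9 is an arbitrary assumption that should be chosen to suit the values being compared.

```csharp
using System;

public static class DoubleDemo
{
    // Direct equality fails because 0.1, 0.2, and 0.3 are all binary approximations.
    public static bool SumEqualsExactly()
    {
        double a = 0.1;
        double b = 0.2;
        return a + b == 0.3;
    }

    // Compares two doubles within a caller-supplied tolerance.
    public static bool NearlyEqual(double x, double y, double tolerance)
    {
        return Math.Abs(x - y) < tolerance;
    }

    public static void Main()
    {
        Console.WriteLine(SumEqualsExactly());                // False
        Console.WriteLine(NearlyEqual(0.1 + 0.2, 0.3, 1e-9)); // True
    }
}
```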

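Approximate Range

- Float: ±1.5 x 10^-45 to ±3.4 x 10^38

- Double: ±5.0 x 10^-324 to ±1.7 x 10^308

- Decimal: ±1.0 x 10^-28 to ±7.9 x 10^28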
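What happens at the edge of each range differs between the types. The sketch below, with illustrative names (RangeDemo, DoubleOverflowsToInfinity, DecimalOverflowThrows), assumes the standard .NET behavior that Double overflows silently to infinity, while Decimal arithmetic throws an OverflowException.

```csharp
using System;

public static class RangeDemo
{
    // Exceeding double's range yields positive infinity rather than an exception.
    public static bool DoubleOverflowsToInfinity()
    {
        double big = double.MaxValue;
        double result = big * 2;
        return double.IsPositiveInfinity(result);
    }

    // Exceeding decimal's range throws OverflowException at run time.
    public static bool DecimalOverflowThrows()
    {
        decimal max = decimal.MaxValue; // a local variable, so the addition happens at run time
        try
        {
            decimal result = max + 1m;
            return false;
        }
        catch (OverflowException)
        {
            return true;
        }
    }

    public static void Main()
    {
        Console.WriteLine(DoubleOverflowsToInfinity()); // True
        Console.WriteLine(DecimalOverflowThrows());     // True
    }
}
```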

Accuracy

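- Float is the least precise of the three, with about 7 significant digits.

- Double is more precise than Float, with 15-16 significant digits, but less precise than Decimal.

- Decimal is the most precise, with 28-29 significant digits, although its range is much smaller than that of Float or Double.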
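The ordering above can be observed by pushing one value through each type and measuring what is lost. This is only a sketch: the class and method names are hypothetical and the sample value is arbitrary. It also prints the storage size of each type, since the extra digits come at the cost of extra bytes.

```csharp
using System;

public static class AccuracyDemo
{
    // An arbitrary sample value with 18 significant digits.
    public const decimal Original = 123456789.123456789m;

    // Error introduced by a round trip through float.
    public static decimal FloatError()
    {
        return Math.Abs(Original - (decimal)(float)Original);
    }

    // Error introduced by a round trip through double.
    public static decimal DoubleError()
    {
        return Math.Abs(Original - (decimal)(double)Original);
    }

    public static void Main()
    {
        Console.WriteLine(sizeof(float));   // 4
        Console.WriteLine(sizeof(double));  // 8
        Console.WriteLine(sizeof(decimal)); // 16

        // Float loses the most, Double loses less, Decimal holds the value exactly.
        Console.WriteLine(FloatError() > DoubleError()); // True
        Console.WriteLine(DoubleError() > 0m);           // True
    }
}
```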

Conclusion

The main differences between Decimal, Float, and Double in .NET are precision, range, and size. Decimal offers the highest precision and stores base-10 fractions exactly, which makes it best suited for financial and monetary calculations, but it has the smallest range of the three. Float uses the least memory at the cost of precision, while Double offers higher precision and a larger range than Float and is commonly used for general-purpose numerical calculations. When choosing between these data types, consider how many significant digits your application needs, how large or small its values can get, and whether exact decimal fractions matter.
