Decision Tree Algorithm in Machine Learning

A decision tree is a non-parametric supervised learning algorithm used for classification and regression tasks. It represents a hierarchical structure that resembles a tree, where internal nodes represent decision points, branches represent the possible outcomes of each decision, and leaf nodes represent the final predictions or classifications. Decision trees are popular for their simplicity, interpretability, and ability to handle both categorical and numerical data.

How do Decision Trees Work?

Decision trees work by recursively partitioning the data into smaller and smaller subsets. Each partition is based on a decision rule, which is a question about a particular feature of the data. The goal is to partition the data in such a way that the data points in each partition are as similar as possible, and the data points in different partitions are as different as possible.

The leaves of the decision tree represent the final decision or prediction. Each leaf is labeled with the most likely class for classification tasks or the average value for regression tasks.

Types of Decision Trees

There are two main types of decision trees:

- Classification Trees: Classification trees are used to predict the class of a new data point. The classes are mutually exclusive and exhaustive, meaning that each data point belongs to exactly one class.

- Regression Trees: Regression trees are used to predict a numerical value for a new data point. The numerical value is continuous, meaning that it can take on any value within a certain range.

Example of Decision Tree Classification

Suppose we want to classify fruits based on their characteristics (color, size, and texture). We have a training dataset of fruits with their characteristics and corresponding species (e.g., apple, orange, banana). To classify a new fruit, we would:

- Start at the root node and follow the branches based on the fruit's characteristics.

- At each internal node, compare the fruit's characteristic to the value at the node and follow the corresponding branch.

- Reach the leaf node, which represents the predicted species of the new fruit.

Example of Decision Tree Regression

Suppose we want to predict the house price based on its size, location, and amenities. We have a training dataset of houses with their characteristics and corresponding prices. To predict the price of a new house, we would:

- Start at the root node and follow the branches based on the house's characteristics.

- At each internal node, compare the house's characteristic to the value at the node and follow the corresponding branch.

- Reach the leaf node, which represents the predicted price of the new house.

Python Implementation of Decision Tree

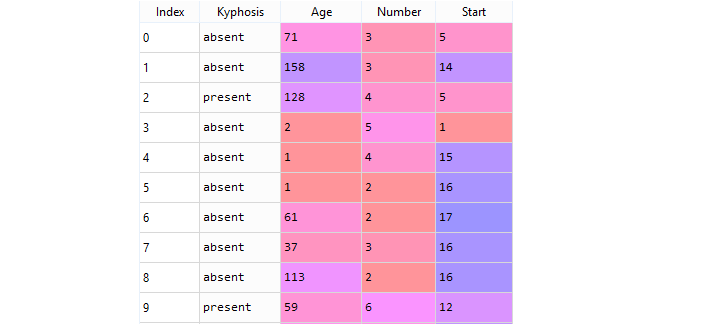

About the Dataset - Kyphosis

The data frame with 81 rows and 4 columns represents data on patients who have undergone corrective spinal surgery, and the data includes information about the medical condition kyphosis, which causes a forward curving of the back. Kyphosis can occur at any age but is most common in older women. The data likely contains various features related to the patients' condition, treatment, and outcomes, which can be analyzed to gain insights into the effects of the corrective spinal surgery on kyphosis patients.

This file is meant for testing purposes only, download it from here: kyphosis.csv .

This data-frame contains the following columns:

- Kyphosis : A factor with levels absent present indicating if a kyphosis was present after the operation.

- Age : In months.

- Number : The number of vertebrae involved.

- Start : The number of the first vertebra operated on.

Sample dataset

To access the complete source code for the Python Implementation of Decision Tree algorithm with explanation, click on the following link: Decision Tree Example

Key Concepts of Decision Trees

Tree Structure: The Foundation of Decision Trees

Decision trees are represented using a hierarchical tree structure, where each node represents a decision or a split in the data. This structure allows the algorithm to make a series of decisions based on the features of the data points, leading to a final prediction or classification.

Branches and Splits: Exploring into the Decision-Making Process

Branches represent the possible outcomes of a decision at each node. These branches are created by splitting the data based on the values of the features. The splitting criteria are determined by evaluating the information gain or entropy of each feature, ensuring that the splits maximize the information gained at each step.

Leaves: Reaching the Final Outcome

Leaf nodes are the terminal nodes in the decision tree, representing the final predictions or classifications. These leaves contain the most specific information about the target variable based on the decisions made along the path from the root node.

Root Node: The Starting Point of Decision-Making

The root node is the topmost node in the decision tree, representing the initial decision point. It is responsible for splitting the entire dataset into smaller subsets based on the most informative feature.

Internal Nodes: Intermediate Decisions along the Path

Internal nodes represent intermediate decisions in the decision-making process. They receive data from their parent node and further split the data based on specific conditions. Each internal node contributes to refining the predictions or classifications as the data progresses through the tree structure.

Leaf Nodes: Reaching the Final Classification or Prediction

Leaf nodes are the final nodes in the decision tree, representing the predicted outcome or classification for a given data point. They provide the most specific information about the target variable based on the decisions made along the path from the root node.

Pruning: Preventing Overfitting and Enhancing Generalizability

Pruning is the process of selectively removing unnecessary branches from the decision tree. This technique helps prevent overfitting, which occurs when the tree captures too much detail from the training data and fails to generalize well to new, unseen data. Pruning simplifies the tree, reducing its complexity and improving its overall performance.

Entropy and Information Gain: Guiding the Splitting Process

Entropy and information gain are measures used to select the best attributes for splitting at each node. Entropy measures the impurity or randomness in the data, while information gain measures the reduction in entropy achieved by splitting the data based on a particular feature. Choosing the feature with the highest information gain ensures that the splits maximize the information gained at each step.

Decision Rules: Unveiling the Relationship between Features and Target Variable

The path from the root node to a leaf node represents a decision rule, providing insights into the relationship between the features of the data points and the target variable. These decision rules can be extracted from the tree and interpreted to understand the factors that contribute to the predictions or classifications.

Limitations of Decision Trees

Prone to Overfitting

Decision trees are susceptible to overfitting, a condition where the model performs extremely well on the training data but poorly on unseen data. This occurs when the tree becomes too complex and memorizes the specific patterns and noise in the training data, failing to generalize to new situations. Overfitting is particularly problematic for decision trees because they are recursive algorithms that can easily create very deep and complex trees.

To mitigate overfitting, several techniques can be employed:

- Pruning: Pruning involves removing unnecessary branches from the tree to reduce its complexity. This helps prevent the tree from overfitting to the training data by removing the overly specific splits that may not generalize well to unseen data.

- Regularization: Regularization techniques, such as limiting the maximum depth of the tree or penalizing complex trees, can also help control overfitting. These techniques encourage the tree to focus on simpler, more general patterns instead of memorizing the training data.

Handling Continuous Features

Decision trees are designed to handle categorical features, where each data point belongs to a specific category. However, they may struggle to effectively handle continuous features, which represent numeric values along a spectrum. Decision trees typically discretize continuous features into bins or categories, which may introduce noise and reduce the accuracy of the predictions.

To address this limitation, several techniques can be employed:

- Binning: Binning involves dividing the range of a continuous feature into a set of discrete bins or categories. This allows the decision tree to treat the continuous feature as a categorical variable, while still retaining some of the underlying information.

- Feature Transformation: Feature transformation techniques, such as normalization or standardization, can be applied to continuous features to scale them to a similar range and improve the effectiveness of decision trees.

Computational Complexity

The computational complexity of decision trees increases significantly as the number of features and data points grows. This can make it impractical to train and use decision trees on large datasets, as the computational requirements become prohibitive.

To manage computational complexity, several techniques can be employed:

- Feature Selection: Feature selection methods can be used to identify and remove irrelevant or redundant features, reducing the dimensionality of the data and improving the computational efficiency of decision trees.

- Decision Tree Ensembles: Decision tree ensembles, such as random forests or bagging, combine multiple decision trees to improve the overall accuracy and reduce overfitting. This approach can effectively handle large datasets and provide more robust predictions.

Conclusion

Decision trees are powerful and versatile machine learning algorithms for classification and regression tasks. Their interpretability, simplicity, robustness, and ability to provide insights into feature importance make them valuable tools for a wide range of applications. However, it's essential to carefully consider the potential limitations of decision trees and address them appropriately to ensure reliable and accurate results.