What is the K-Nearest Neighbor(KNN) algorithm?

K-Nearest Neighbor (KNN) is a non-parametric, instance-based learning algorithm used for classification and regression tasks. It operates by considering the proximity of data points to classify a new data point. Unlike other machine learning algorithms that learn a model from the training data, KNN utilizes the training data directly for classification or regression.

How K-Nearest Neighbor(KNN) Works

The K-NN algorithm works by identifying the k nearest neighbors in the training set for a given new data point. The k nearest neighbors are the k data points in the training set that are most similar to the new data point, based on a distance metric such as Euclidean distance.

Once the k nearest neighbors have been identified, the class or value of the new data point is predicted by taking the majority vote or averaging the values of the k nearest neighbors. For classification tasks, the class with the most votes among the k nearest neighbors is assigned to the new data point. For regression tasks, the average value of the k nearest neighbors is assigned to the new data point.

Classification with KNN

In classification tasks, KNN assigns the new data point to the class that is most prevalent among its k nearest neighbors. This means that the new data point is assigned to the class that has the most representatives among its k nearest neighbors.

Regression with KNN

In regression tasks, KNN predicts the value of the new data point by averaging the values of its k nearest neighbors. This means that the predicted value is an average of the values of the k data points that are most similar to the new data point in the feature space.

Choosing the Value of K

The value of K is a hyperparameter that needs to be chosen carefully. If K is too small, the model may be overfit to the training data and may not generalize well to new data. If K is too large, the model may be underfit to the training data and may not capture the underlying patterns in the data.

There are a number of different ways to choose the value of K. One way is to use a grid search, which involves trying different values of K and evaluating the performance of the model on each value. Another way is to use a cross-validation technique, which involves splitting the training data into multiple folds and using each fold as a hold-out set to evaluate the performance of the model on different values of K.

Example of KNN Classification

Suppose we want to classify a new flower based on its petal length and petal width. We have a training dataset of flowers with their petal measurements and corresponding species (e.g., Iris setosa, Iris versicolor, Iris virginica). To classify a new flower, we would:

- Choose the value of k, the number of nearest neighbors to consider.

- Calculate the Euclidean distance between the new flower's petal measurements and each flower in the training dataset.

- Identify the k nearest neighbors based on the smallest distances.

- Assign the new flower to the species that is most prevalent among its k nearest neighbors.

Example of KNN Regression

Suppose we want to predict the house price based on its size and location. We have a training dataset of houses with their size, location, and corresponding prices. To predict the price of a new house, we would:

- Choose the value of k, the number of nearest neighbors to consider.

- Calculate the Euclidean distance between the new house's size and location and each house in the training dataset.

- Identify the k nearest neighbors based on the smallest distances.

- Predict the price of the new house by averaging the prices of its k nearest neighbors.

Python implementation of the KNN algorithm

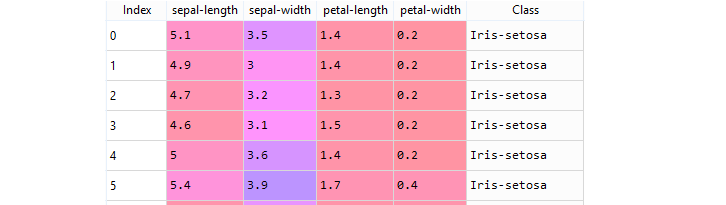

Importing the Dataset (Iris data)

It is a dataset that measures sepal-length, sepal-width, petal-length, and petal-width of three different types of iris flowers: Iris setosa, Iris virginica, and Iris versicolor. The task is to predict the "Class" to which these plants belong.

Sample dataset

To access the complete source code for the Python implementation of the KNN algorithm with explanation, click on the following link: K-Nearest Neighbor(KNN) Example

Key Concepts of K-Nearest Neighbor(KNN)

Non-Parametric Nature of KNN

Unlike parametric machine learning algorithms, which make assumptions about the underlying data distribution (e.g., linear regression assumes a linear relationship between variables), KNN is non-parametric. This means that KNN does not make any assumptions about the data distribution, making it a versatile algorithm that can be applied to a wide range of datasets without the need for prior knowledge of the data distribution.

Instance-Based Learning

KNN is an instance-based learning algorithm, meaning that it relies on the similarity of data points in the training data to make predictions for new data points. It doesn't explicitly learn a model from the training data; instead, it utilizes the training data directly for classification or regression.

Identifying Nearest Neighbors

The core of KNN lies in identifying the k nearest neighbors to a new data point. The value of k, known as the hyperparameter, is chosen by the user and determines the number of nearest neighbors to consider. The nearest neighbors are typically determined using a distance metric, such as the Euclidean distance, which measures the geometric distance between two data points in the feature space.

Limitations of K-Nearest Neighbor(KNN)

Sensitivity to Irrelevant Features

KNN is susceptible to the influence of irrelevant or redundant features in the data. These features can add noise and distract the algorithm from the truly relevant factors that contribute to the classification or prediction. When irrelevant features are present, the k nearest neighbors may not provide accurate insights into the underlying relationships between variables, leading to reduced accuracy and generalizability.

To mitigate this limitation, feature selection techniques can be employed to identify and remove irrelevant or redundant features before applying KNN. Feature selection methods can help reduce the dimensionality of the data, focusing on the most informative features and improving the performance of KNN.

Computational Complexity with Increasing Data Size

As the number of data points in the training dataset increases, the computational complexity of KNN grows significantly. This is because KNN requires calculating the distance between the new data point and each data point in the training set to identify the k nearest neighbors. This computation becomes increasingly time-consuming and resource-intensive as the data size grows.

To address this limitation, techniques like data sampling or dimensionality reduction can be employed to reduce the size of the training data while preserving the essential information. These methods can significantly reduce the computational burden of KNN, making it more practical for large datasets.

Choosing the Appropriate Value of k

The choice of k, the number of nearest neighbors to consider, is a crucial factor in the performance of KNN. Selecting an inappropriate value of k can lead to underfitting or overfitting. Underfitting occurs when k is too small, and the model is unable to capture the complexity of the data. Overfitting occurs when k is too large, and the model memorizes the training data, including noise and random fluctuations, resulting in poor generalizability.

To determine the optimal value of k, techniques like cross-validation can be used. Cross-validation involves evaluating the model's performance on multiple subsets of the data, providing insights into the impact of different k values. By selecting the k that yields the best performance across these subsets, the model can be optimized for

Conclusion

KNN is a powerful and versatile machine learning algorithm for classification and regression tasks. Its simplicity, interpretability, robustness, and non-parametric nature make it a valuable tool for a wide range of applications. However, it's essential to carefully consider the potential limitations of KNN and address them appropriately to ensure reliable and accurate results.