Ordinary Least Squares (OLS) Regression

Ordinary Least Squares (OLS) Regression is a statistical method for estimating the unknown parameters of a linear regression model by minimizing the sum of the squared residuals, where a residual is the difference between an observed value of the dependent variable and the value the model predicts. It is one of the most widely used techniques in statistics and machine learning because it is simple to apply and its coefficients are easy to interpret.

How does OLS Regression Work?

OLS Regression works by fitting a linear equation to the data. For a single independent variable, the linear equation is:

$$ y = \beta_0 + \beta_1 x + \varepsilon $$

- y is the dependent variable

- β0 is the intercept

- β1 is the coefficient for the independent variable x

- x is the independent variable

- ε is the error term

The goal of OLS Regression is to find the values of β0 and β1 that minimize the sum of the squared errors (SSE). Over n observations, the SSE is:

$$ \mathrm{SSE} = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2 $$

- ŷ is the predicted value of the dependent variable

- y is the actual value of the dependent variable

The values of β0 and β1 that minimize the SSE are called the least squares estimates. They can be computed in closed form by solving the normal equations (often described as the matrix inversion method) or approximated iteratively with gradient descent.
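For a single independent variable, the closed-form estimates reduce to two short expressions: the slope is the sum of cross-deviations divided by the sum of squared deviations of x, and the intercept follows from the means. The sketch below is a minimal illustration in plain Python. The function names `fit_simple_ols` and `sse` are hypothetical, and the data values are made up for demonstration rather than taken from any data set in this article.

```python
def fit_simple_ols(x, y):
    """Return (b0, b1), the least squares estimates for y = b0 + b1 * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    # Sum of cross-deviations and sum of squared deviations of x.
    s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_mean) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("x must contain at least two distinct values")
    b1 = s_xy / s_xx
    b0 = y_mean - b1 * x_mean
    return b0, b1


def sse(x, y, b0, b1):
    """Sum of squared errors for the line y = b0 + b1 * x."""
    return sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))


# Illustrative values only (an assumption, not real data).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.1, 4.9, 7.2, 8.8, 11.0]

b0, b1 = fit_simple_ols(x, y)
print(f"intercept={b0:.3f}, slope={b1:.3f}, SSE={sse(x, y, b0, b1):.4f}")
```

Nudging either estimate away from the returned values and calling `sse` again should give a larger result, which is a quick way to confirm that the fit sits at the minimum.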

Ordinary Least Squares Example:

Consider the restaurant data set restaurants.csv. A restaurant guide collects several variables for a group of restaurants in a city. The variables are described below:

| Field | Description |
|---|---|
| Restaurant_ID | Restaurant code |
| Food_Quality | Food quality rating, in points |
| Service_Quality | Service quality rating, in points |
| Price | Price of a meal |

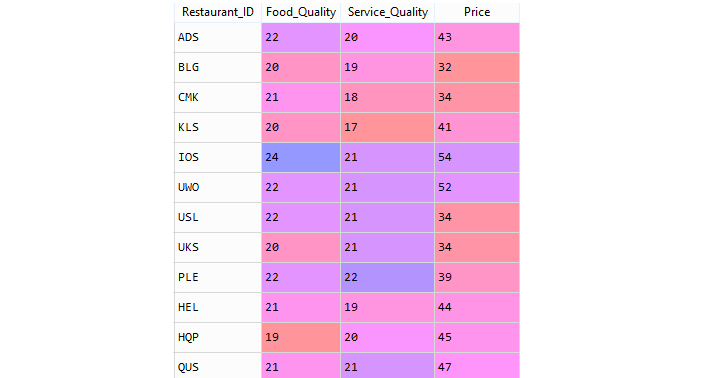

Restaurant data sample.
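One way to fit a model to this data set is to solve the normal equations directly. The sketch below is a minimal, standard-library-only illustration, not the article's own source code. It assumes that Price is the dependent variable and that Food_Quality and Service_Quality are the independent variables; the article does not state these roles, so treat them as an assumption. All function names (`load_columns`, `solve_linear_system`, `fit_ols`, `fit_price_model`) are hypothetical.

```python
import csv


def load_columns(path, names):
    """Read the named numeric columns from a CSV file with a header row."""
    columns = {name: [] for name in names}
    with open(path, newline="") as handle:
        for row in csv.DictReader(handle):
            for name in names:
                columns[name].append(float(row[name]))
    return columns


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    m = [list(a[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_ols(predictors, response):
    """Return [intercept, coef_1, ..., coef_k] from the normal equations."""
    n = len(response)
    # Design matrix rows: a leading 1 for the intercept, then the predictors.
    rows = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, response)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def fit_price_model(path="restaurants.csv"):
    """Regress Price on Food_Quality and Service_Quality (assumed roles)."""
    data = load_columns(path, ["Food_Quality", "Service_Quality", "Price"])
    return fit_ols([data["Food_Quality"], data["Service_Quality"]], data["Price"])
```

With restaurants.csv in the working directory, `fit_price_model()` would return the intercept followed by the coefficients for Food_Quality and Service_Quality. In practice a numerical library would usually replace the hand-written solver; it is spelled out here only to keep the example self-contained.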


Key Concepts of OLS Regression

Linear Relationship

OLS assumes a linear relationship between the independent variables and the dependent variable. This means that the dependent variable is expected to change in a straight-line fashion as the independent variables change.

Minimizing Residuals

The goal of OLS is to find the values of the coefficients that minimize the sum of the squared residuals. Residuals are the differences between the observed values of the dependent variable and the predicted values based on the linear regression model.
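The minimization described above can also be carried out iteratively. The sketch below uses plain gradient descent on the mean of the squared residuals, which has the same minimizer as the sum. The function name `gradient_descent_ols`, the learning rate, the step count, and the data values are all illustrative assumptions; a different data scale would likely need a different learning rate.

```python
def gradient_descent_ols(x, y, learning_rate=0.05, n_steps=2000):
    """Approximate the least squares estimates for y = b0 + b1 * x."""
    n = len(x)
    b0, b1 = 0.0, 0.0
    for _ in range(n_steps):
        # Residuals under the current coefficient values.
        residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
        # Gradient of the mean squared residual with respect to b0 and b1.
        grad_b0 = -2.0 * sum(residuals) / n
        grad_b1 = -2.0 * sum(r * xi for r, xi in zip(residuals, x)) / n
        # Step against the gradient to reduce the squared error.
        b0 -= learning_rate * grad_b0
        b1 -= learning_rate * grad_b1
    return b0, b1


# Illustrative values only (an assumption, not real data).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.1, 4.9, 7.2, 8.8, 11.0]

gd_b0, gd_b1 = gradient_descent_ols(x, y)
print(f"intercept={gd_b0:.4f}, slope={gd_b1:.4f}")
```

For a problem this small the closed-form solution is simpler and exact; the iterative approach mainly becomes attractive when the data set is too large to solve the normal equations comfortably.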

Least Squares Fit

The OLS method identifies the line that best fits the data points, minimizing the overall error. This line is often referred to as the "least squares regression line" or the "best fit line."

Interpretable Coefficients

The coefficients obtained from OLS regression can be interpreted as the expected change in the dependent variable for a one-unit increase in an independent variable, holding the other independent variables constant. A positive coefficient indicates a positive association: as the independent variable increases, the dependent variable is expected to increase as well. Conversely, a negative coefficient indicates a negative association: as the independent variable increases, the dependent variable is expected to decrease. These are statements about association in the fitted model, not about causation.
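The interpretation above can be checked numerically: increasing one independent variable by one unit while holding the others fixed changes the prediction by exactly that variable's coefficient. The sketch below uses hypothetical coefficient values chosen only for illustration; `predict` is likewise a hypothetical helper.

```python
def predict(coefs, features):
    """Prediction from [intercept, coef_1, ..., coef_k] and k feature values."""
    intercept, *slopes = coefs
    return intercept + sum(b * v for b, v in zip(slopes, features))


# Hypothetical fitted coefficients: intercept, then one per predictor.
coefs = [4.0, 1.5, -0.8]

base = predict(coefs, [10.0, 3.0])
bumped_first = predict(coefs, [11.0, 3.0])   # first predictor + 1, second fixed
bumped_second = predict(coefs, [10.0, 4.0])  # second predictor + 1, first fixed

# Each difference matches the corresponding coefficient (up to rounding).
print(round(bumped_first - base, 6))
print(round(bumped_second - base, 6))
```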

Advantages of OLS Regression

The main advantages of OLS Regression are its simplicity, the interpretability of its coefficients, and its reasonable behavior under mild problems such as moderate multicollinearity or a few non-influential outliers. These characteristics contribute to the widespread use of OLS in various fields, particularly when the goal is to build a transparent and easily understandable model.

Simplicity

Ease of Implementation: One of the key advantages of OLS Regression is its simplicity. The method is straightforward to understand and implement, making it accessible to both beginners and experienced data scientists. This simplicity allows quick deployment and lets practitioners focus on interpreting the results.

Interpretability

Direct Interpretation of Coefficients: OLS Regression provides a coefficient for each independent variable, and these coefficients have a direct and intuitive interpretation. For a simple linear regression model (y = β0 + β1x), the slope β1 represents the expected change in the dependent variable for a one-unit change in the independent variable. This interpretability enhances the model's utility in explaining relationships between variables and informing decision-making.

Robustness

Outlier Handling: OLS Regression often gives reasonable estimates when a data set contains only a few mild outliers, which are data points that deviate from the overall pattern. Because the method minimizes squared differences, however, large residuals receive disproportionate weight, and a single extreme or high-leverage point can shift the fitted line noticeably. Inspecting the residuals for such points is advisable before relying on the estimates.

Moderate Multicollinearity Tolerance: OLS Regression can handle moderate levels of multicollinearity, which occurs when independent variables are correlated with each other. High multicollinearity leads to unstable coefficient estimates, but at moderate levels the estimates remain usable, enabling the analysis of relationships among correlated predictors.
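How much a single extreme value moves the fit can be checked directly by fitting the same data with and without it. The sketch below is a minimal illustration with made-up values: the clean data lie exactly on y = 2x, and the second data set replaces the last value with an extreme one. The function name `fit_simple_ols` is hypothetical.

```python
def fit_simple_ols(x, y):
    """Return (b0, b1), the least squares estimates for y = b0 + b1 * x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_mean) ** 2 for xi in x)
    b1 = s_xy / s_xx
    return y_mean - b1 * x_mean, b1


# Illustrative values only (an assumption, not real data).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y_clean = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]  # exactly y = 2x
y_outlier = y_clean[:-1] + [30.0]           # last value replaced by an extreme one

clean_b0, clean_b1 = fit_simple_ols(x, y_clean)
out_b0, out_b1 = fit_simple_ols(x, y_outlier)

print(f"clean fit:        intercept={clean_b0:.3f}, slope={clean_b1:.3f}")
print(f"with one outlier: intercept={out_b0:.3f}, slope={out_b1:.3f}")
```

The outlier here sits at the largest x value, so it has high leverage; the same deviation placed near the middle of the x range would move the slope less.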

Limitations of OLS Regression

- Linearity Assumption: OLS Regression relies on the assumption of a linear relationship between the independent variables and the dependent variable. If the relationship is not linear, OLS Regression may not be an appropriate choice.

- Multicollinearity Sensitivity: While OLS Regression can handle moderate multicollinearity, high levels can lead to unstable and unreliable results. It's important to check for multicollinearity before applying OLS Regression.

- Overfitting Risk: When a model has many independent variables relative to the number of observations, OLS Regression can overfit, fitting the training data closely but performing poorly on unseen data. Regularized variants such as ridge or lasso regression can help mitigate overfitting.
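The multicollinearity check mentioned in the list above can be sketched with the variance inflation factor (VIF). With exactly two independent variables, the VIF of either one is 1 / (1 - r²), where r is the Pearson correlation between them. The function names and data values below are illustrative assumptions, and the commonly quoted rule of thumb that a VIF above roughly 5 to 10 deserves attention is a convention rather than a strict threshold.

```python
import math


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    a_mean = sum(a) / n
    b_mean = sum(b) / n
    cov = sum((ai - a_mean) * (bi - b_mean) for ai, bi in zip(a, b))
    var_a = sum((ai - a_mean) ** 2 for ai in a)
    var_b = sum((bi - b_mean) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(x1, x2):
    """VIF of either predictor in a model with exactly two predictors."""
    r = pearson_r(x1, x2)
    denom = 1.0 - r * r
    # Perfectly collinear predictors make the OLS estimates non-unique.
    return math.inf if denom <= 0 else 1.0 / denom


# Illustrative values only (an assumption, not real data).
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2_related = [2.1, 3.9, 6.2, 7.8, 10.1]   # nearly a multiple of x1
x2_unrelated = [2.0, 5.0, 1.0, 4.0, 3.0]  # weakly correlated with x1

print(f"VIF with related predictor:   {vif_two_predictors(x1, x2_related):.1f}")
print(f"VIF with unrelated predictor: {vif_two_predictors(x1, x2_unrelated):.3f}")
```

With more than two independent variables, each VIF comes from regressing that variable on all the others, so the pairwise shortcut used here no longer applies.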

Conclusion

Ordinary Least Squares (OLS) Regression is a fundamental and versatile technique for understanding linear relationships between variables and making predictions. Its simplicity and interpretability make it a valuable tool for a wide range of applications. However, it is essential to check the assumptions of OLS Regression, including linearity, the absence of influential outliers, and limited multicollinearity, and to address its limitations to ensure reliable and valid results.