Machine Learning Random Forest Algorithm

Random Forest is a supervised machine learning algorithm that is used for both classification and regression tasks. It is an ensemble method, which means that it combines the predictions of multiple decision trees to make a more accurate prediction. Random Forest is a powerful algorithm that is often used for difficult problems, as it is less prone to overfitting than other machine learning algorithms.

How does Random Forest work?

Random Forest works by creating a collection of decision trees. Each decision tree is trained on a different subset of the data, and each tree is allowed to consider only a random subset of the features. This helps to prevent the trees from becoming too correlated with each other, which can lead to overfitting.

To make a prediction, Random Forest aggregates the predictions of all of the trees in the forest. For classification tasks, the majority class prediction is chosen. For regression tasks, the average of the predictions is used.

The Ensemble Concept: Strength in Unity

Random Forest operates by constructing a collection of decision trees, each trained on a different subset of the data. These trees are trained independently, introducing diversity among their predictions. By combining the predictions of these individual trees, Random Forest uses the strengths of each tree while mitigating their weaknesses.

Construction of Random Forest

The construction of a Random Forest involves two key steps:

- Bootstrap Aggreation (Bagging): Random Forest employs bagging to create subsets of the training data. Each decision tree is trained on a distinct subset, ensuring that each tree considers a different perspective of the data.

- Randomized Decision Trees: During the training of each decision tree, Random Forest randomly selects a subset of features to consider at each split. This randomness prevents any single feature from dominating the decision-making process, further enhancing the diversity among the trees.

Example of Random Forest for Classification

Suppose you want to predict whether an email is spam or not. You can use random forest to train a model on a dataset of emails labeled as spam or not spam. The model will learn to identify the features that are most relevant for predicting whether an email is spam, such as the sender, the subject line, and the content of the email. When a new email is received, the model will make a prediction based on these features.

Example of Random Forest for Regression

Suppose you want to predict the price of a house. You can use random forest to train a model on a dataset of houses with their corresponding prices. The model will learn to identify the features that are most relevant for predicting the price of a house, such as the size of the house, the number of bedrooms and bathrooms, and the location of the house. When a new house is listed for sale, the model will make a prediction of its price.

Python implementation of the Random Forest algorithm

About the Dataset

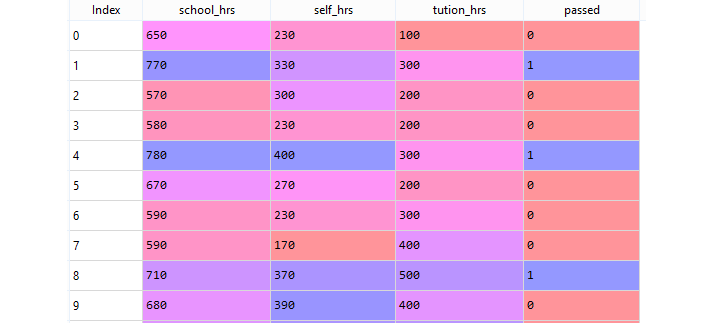

The "study_hours.csv" dataset contains information on students with four features: "school_hrs," which represents the number of hours per year the student studies at school, "self_hrs," indicating the number of hours per year the student studies at home, "tution_hrs," which represents the number of hours per year the student takes private tuition classes, and "passed," which indicates whether the student passed (1) or failed (0). This dataset can be used for various machine learning tasks, such as regression or classification, to predict the likelihood of a student passing based on their study hours at school, at home, and private tuition classes.

Sample Data from study_hours.csv :

To access the complete source code for the Python implementation of the Random Forest algorithm with explanation, click on the following link: Random Forest Example

Key Features of Random Forest

Ensemble Learning

Ensemble learning is a technique that combines the predictions of multiple models to produce a more robust and accurate prediction. In the case of Random Forest, the ensemble consists of a collection of decision trees. Each tree is trained on a different subset of the data, and the final prediction is determined by averaging the predictions of all the trees. This approach helps to reduce the overfitting that can occur when a single model is trained on the entire dataset.

Robustness to Overfitting

Overfitting is a common problem in machine learning, where a model learns the training data too well and fails to generalize to new data. Random Forest is less prone to overfitting than other machine learning algorithms, such as decision trees, because the trees in the forest are trained on different subsets of the data. This reduces the correlation between the trees, which prevents them from memorizing the training data.

Feature Importance

Random Forest provides a measure of feature importance, which indicates how much each feature contributes to the overall prediction. This information can be useful for understanding the data and selecting the most relevant features for the task. There are two main ways to calculate feature importance in Random Forest:

- Mean decrease in impurity: This measures the average decrease in impurity across all trees for each feature. Impurity is a measure of how well a split separates the data into different classes.

- Gini importance: This measures the average decrease in Gini impurity across all trees for each feature. Gini impurity is another measure of impurity that is often used in classification tasks.

Versatility

Random Forest is a versatile machine learning algorithm that can be used for both classification and regression tasks. In classification tasks, the goal is to predict the class of a new data point. For example, you might use Random Forest to classify emails as spam or not spam. In regression tasks, the goal is to predict a numerical value for a new data point. For example, you might use Random Forest to predict the price of a house.

Scalability

Random Forest can be efficiently trained on large datasets. This is because the algorithm can be parallelized, meaning that the training process can be divided up and run on multiple processors or computers. This makes Random Forest a good choice for tasks where the amount of data is large.

Conclusion

Random Forest is a powerful and versatile machine learning algorithm that has been used for a wide variety of applications. It is a popular choice for both classification and regression tasks, due to its high accuracy, robustness, and feature importance.